< Tensorflow > What is the implementation of GRU in tensorflow

How is the GRU cell implemented in TensorFlow?

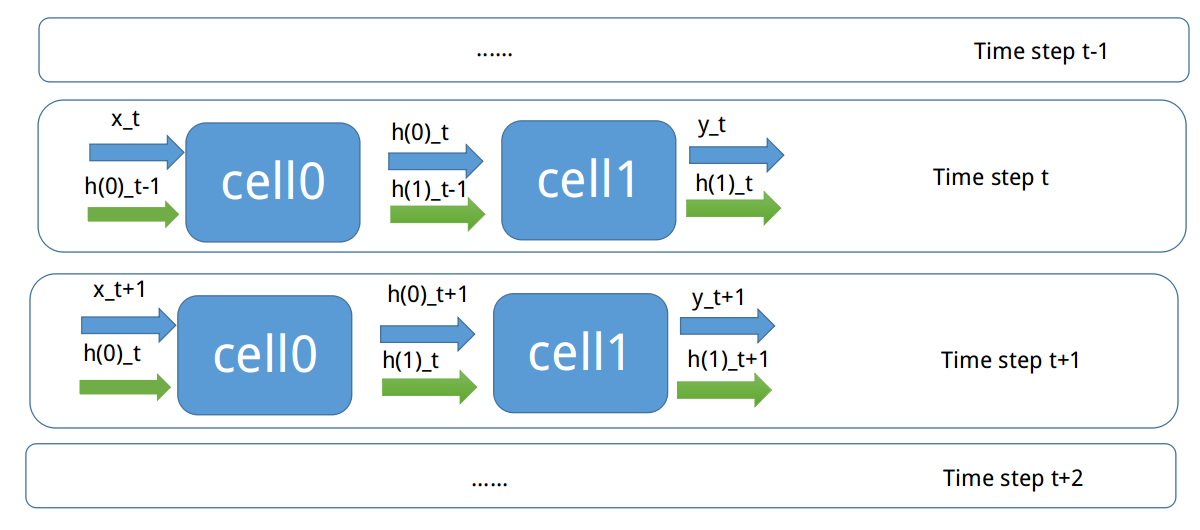

We can use a chart to illustrate the GRU cell implementation in TensorFlow. Let’s take a two-cell GRU as an example.

The chart above shows how a two-cell GRU network processes a sequence at time t and time t+1 in TensorFlow.

x_t is the input at time t. h(0)_t-1 is the hidden state of cell zero at time t-1, while h(1)_t is the hidden state of cell one at time t. y_t is the final output of the GRU network at time t.

As you can see, we have to maintain two variables, h(0)_t-1 and h(1)_t-1, in order to run the two-cell GRU network.

So at the end of each time step, we copy h_now into h_prev for every cell, so that the next step sees it as the previous hidden state.
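The state bookkeeping described above can be sketched in plain NumPy. This is only an illustration, not TensorFlow's own code: `cell_step` is a hypothetical stand-in for the real GRU computation, and the sizes and weight names are assumptions made for the example.

```python
import numpy as np

def cell_step(x, h_prev, w):
    # Hypothetical stand-in for a GRU cell: any function that maps
    # (input, previous hidden state) to a new hidden state works here.
    return np.tanh(np.concatenate([x, h_prev]) @ w)

rng = np.random.default_rng(0)
input_size, hidden_size, num_steps = 3, 4, 5  # assumed sizes

# One weight matrix per cell; cell 1 takes cell 0's hidden state as its input.
weights = [
    rng.normal(size=(input_size + hidden_size, hidden_size)),
    rng.normal(size=(hidden_size + hidden_size, hidden_size)),
]

# h_prev[i] plays the role of h(i)_t-1; start from zeros before the first step.
h_prev = [np.zeros(hidden_size) for _ in weights]
xs = rng.normal(size=(num_steps, input_size))
outputs = []

for x_t in xs:
    h_now = []
    layer_input = x_t
    for w, h in zip(weights, h_prev):
        h_new = cell_step(layer_input, h, w)
        h_now.append(h_new)
        layer_input = h_new  # the output of cell i feeds cell i+1
    outputs.append(layer_input)  # y_t is the hidden state of the last cell
    h_prev = h_now  # end of the time step: h_now becomes h_prev for every cell
```

After the loop, `h_prev` holds the final hidden state of each cell and `outputs` holds one y_t per time step.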

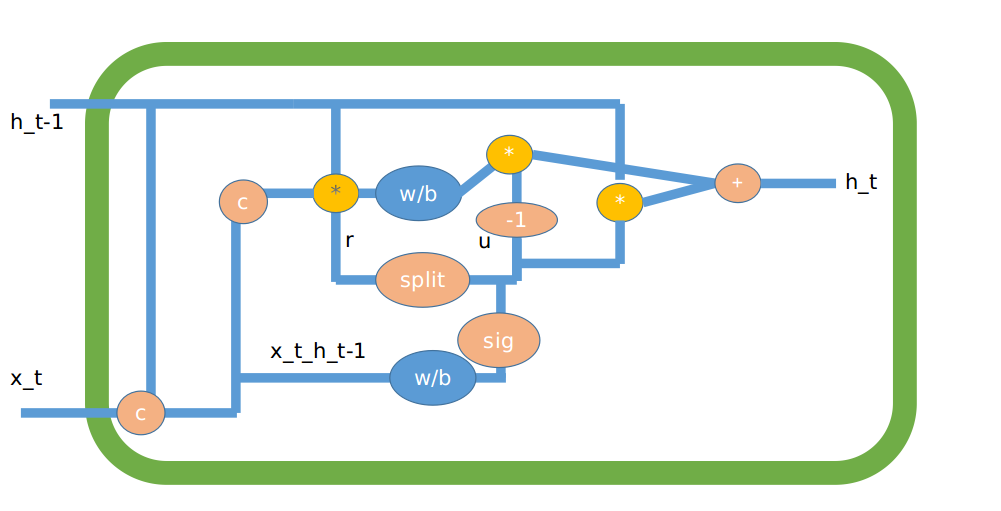

The inner structure of a GRU cell is shown in image 2.

In this chart, “c” is concatenation, “w/b” stands for an inner product with learned weights followed by adding a bias, and “sig” means the sigmoid operation.
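As a rough sketch of how these building blocks fit together, here is a single GRU step in NumPy. It assumes the common formulation in which one “c” + “w/b” + “sig” stage produces a reset gate `r` and an update gate `u`, and a second “c” + “w/b” stage with tanh produces the candidate state. The function name, the parameter names, the gate ordering and the use of tanh are assumptions for illustration and may differ from the picture.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gru_cell(x, h_prev, gate_w, gate_b, cand_w, cand_b):
    # "c" -> "w/b" -> "sig": both gates come from one concatenation and one matmul.
    gates = sigmoid(np.concatenate([x, h_prev]) @ gate_w + gate_b)
    r, u = np.split(gates, 2)  # assumed order: reset gate, then update gate
    # "c" -> "w/b" -> tanh: candidate state built from the reset-scaled previous state.
    candidate = np.tanh(np.concatenate([x, r * h_prev]) @ cand_w + cand_b)
    # The update gate blends the previous state with the candidate.
    return u * h_prev + (1.0 - u) * candidate

rng = np.random.default_rng(0)
input_size, hidden_size = 3, 4  # assumed sizes
x_t = rng.normal(size=input_size)
h_prev = np.zeros(hidden_size)

h_now = gru_cell(
    x_t,
    h_prev,
    gate_w=rng.normal(size=(input_size + hidden_size, 2 * hidden_size)),
    gate_b=np.ones(2 * hidden_size),
    cand_w=rng.normal(size=(input_size + hidden_size, hidden_size)),
    cand_b=np.zeros(hidden_size),
)
```

Note that the gate weights have `2 * hidden_size` output columns because the two gates share one matrix multiplication and are split afterwards.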
